This portfolio outlines my contributions to the following project.

PROJECT: Anakin

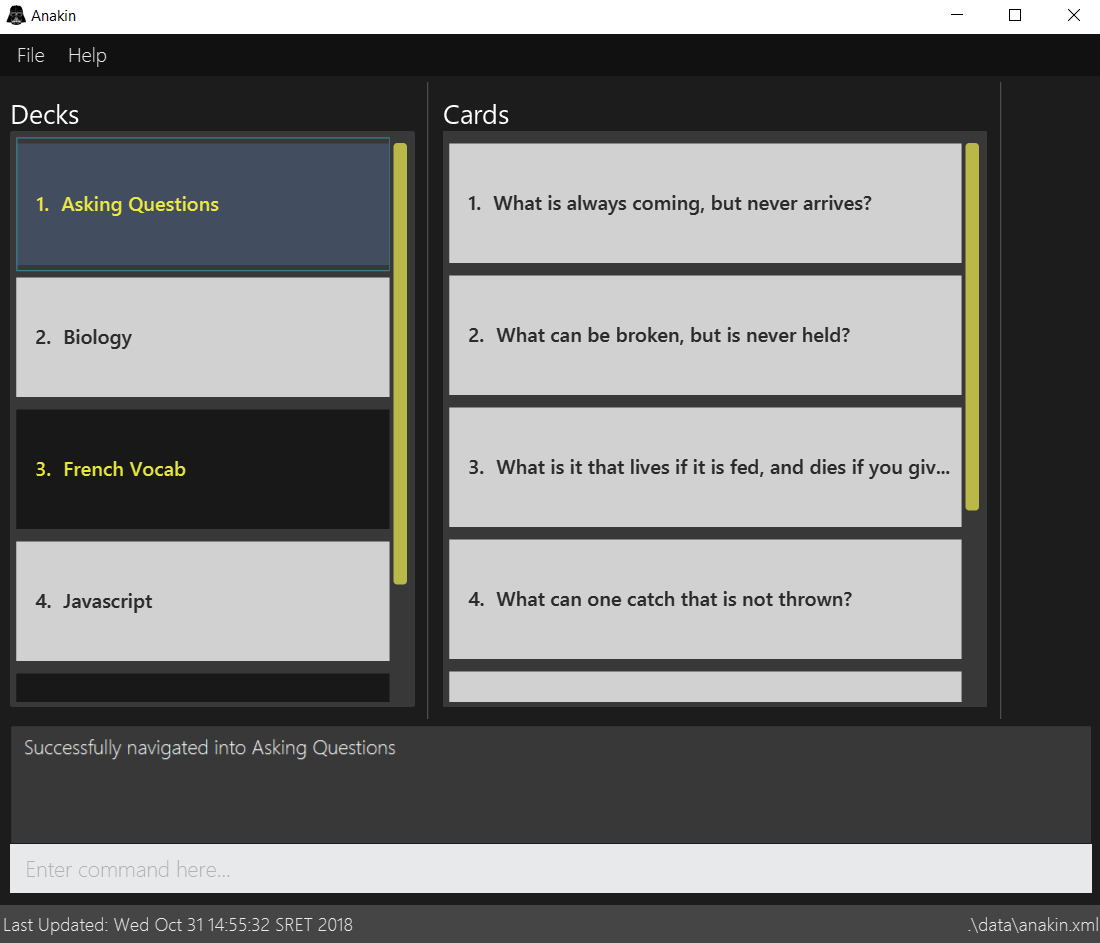

Anakin is the result of our search for a better command line application for revising for exams. From August 2018 to November 2018, Team T09-2, comprising myself, Tay Yu Jia, Foo Guo Wei, David Goh, and Nguyen Truong Thanh, built Anakin as part of a Software Engineering module (CS2103T) conducted at the National University of Singapore.

Overview

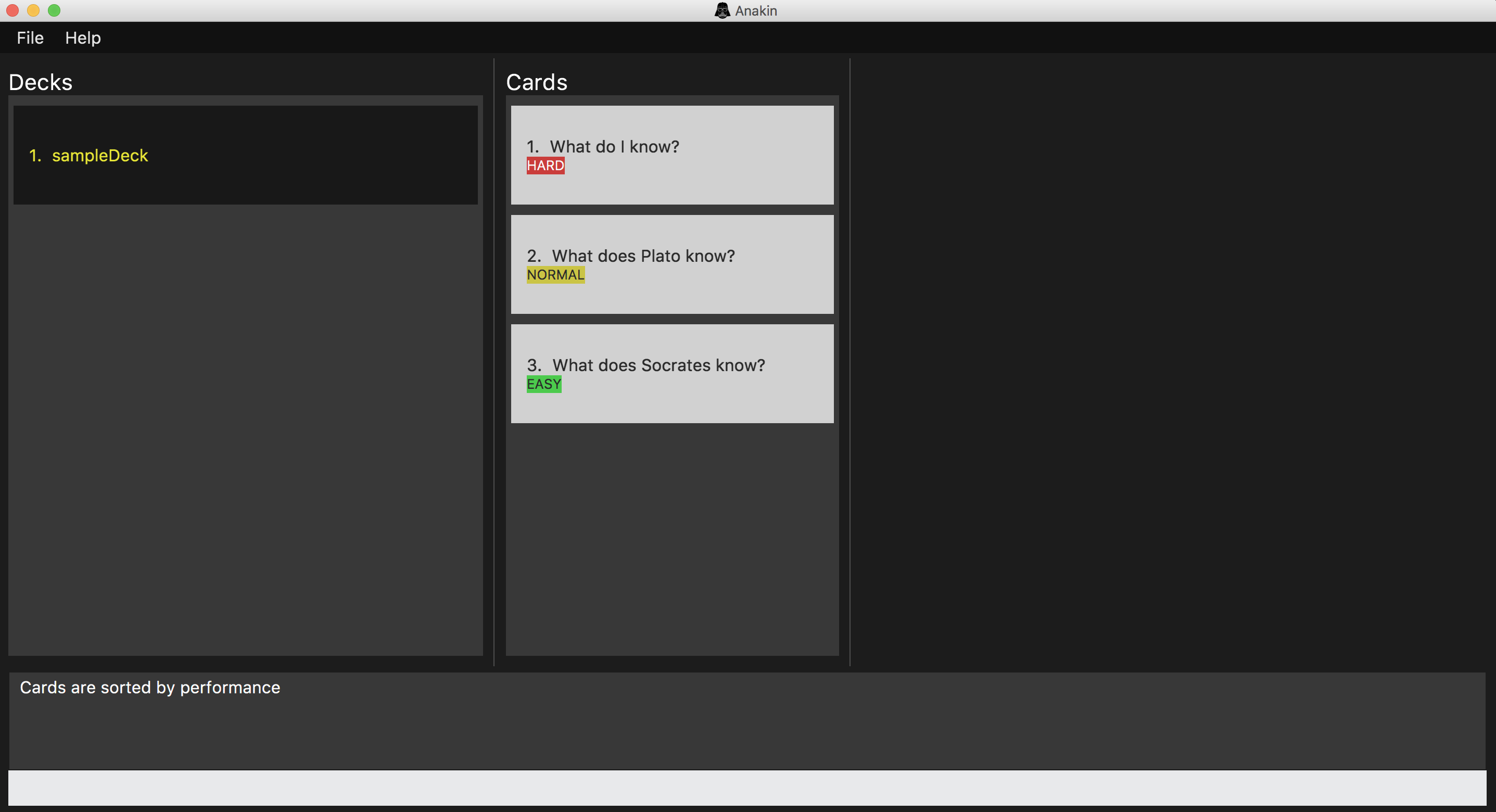

Anakin is a desktop-based flashcard application which makes remembering things easy. The user interacts with the application primarily through a Command Line Interface (CLI), but can fall back to the JavaFX GUI if needed. Anakin schedules reviews using SuperMemo 2 (SM-2), a spaced repetition algorithm; spaced repetition also underpins popular language learning tools such as Duolingo, WaniKani, and Memrise.

Anakin aims to revolutionize the studying experience of technically advanced users. On top of being easy to use, Anakin is environmentally friendly: unlike conventional flashcards, it produces zero paper waste.

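What is spaced repetition?

Spaced repetition is a study technique in which material is reviewed at increasing intervals, so that items you find difficult come up more often than items you recall easily.

Anakin is written in Java and has about 10 kLoC.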

Main features:

- Create decks of flashcards easily
- Review your flashcards on the go
- Track your performance for all flashcards
- Import and export decks

Summary of contributions

This section acts as a summary of my contributions to project Anakin.

- Major enhancement: I added autocompletion for commands.
  - What it does: Helps users complete a partially typed command.
  - Justification: This feature improves the product significantly because users can finish a command with a single press of <TAB> instead of typing it out in full.
  - Highlights: This enhancement removes the need for users to memorise long strings of command syntax. While typing in the command box, they no longer need to consult the User Guide for commands they have forgotten. This makes for a better user experience and, in turn, easier studying for tests.

- Major enhancement: I added the scheduling algorithm at the core of the product, together with the `rank` command.
  - What it does: Allows users to sort cards by performance during review. Cards the user has performed poorly on are pushed to the top of the deck.
  - Justification: This feature improves the product significantly because users will want to review the cards they performed poorly on, to get more out of their revision.
  - Highlights: This lets users focus on what is important to review and makes for more efficient study sessions.

- Minor enhancements:
  - Added the `help` command, which allows users to seek help when they are lost.
  - Implemented the `editdeck` command, which allows users to rename a deck.
  - Added the `exit` command, which allows users to leave the deck.
  - Added the `select` command, which eventually formed the basis for other commands.
  - Added the `undo` and `redo` commands.
- Code contributed: GitHub Commits, RepoSense

- Other contributions:
  - Project management:
    - Set up Continuous Integration
    - Updated the Contact Us page (Pull Request: #68)
    - Added initial documentation for the team README (Pull Request: #23)
    - Integrated RepoSense for code contribution tracking (Pull Request: #75)
    - Configured the naming convention of files and packages across the project to comply with the project style guide (Pull Requests: #38, #106)

  - Enhancements to existing features:
    - Wrote extensive system tests which played a major role in boosting coverage from 66% to above 90%. The tests I wrote are detailed below:
      - Wrote integration tests and tests for the `help` command (#148)
      - Wrote unit tests for `editdeck` (Pull Request: #121)
      - Wrote tests for EditCard, EditDeckParser, and the GUI (Pull Request: #157)
      - Wrote system tests for ClearCommand, FindCommand, LogicManager, EditDeck, and NewDeck (Pull Requests: #158, #165, #238, #242, #244)

  - Documentation:
  - Community:
    - Pull Requests reviewed (with non-trivial review comments): #189, #195, #271
    - Discovered and reported bugs to peers: List of created issues

Contributions to the User Guide

Given below are sections I contributed to the User Guide. They showcase my ability to write documentation targeting end-users.

Autocompletion: Hit <TAB>

Hit <TAB> for autocompletion so you don’t have to remember verbose commands. If an autocompletion is available, hitting <TAB> replaces the current text in the command box with the autocompletion text.

Examples:

-

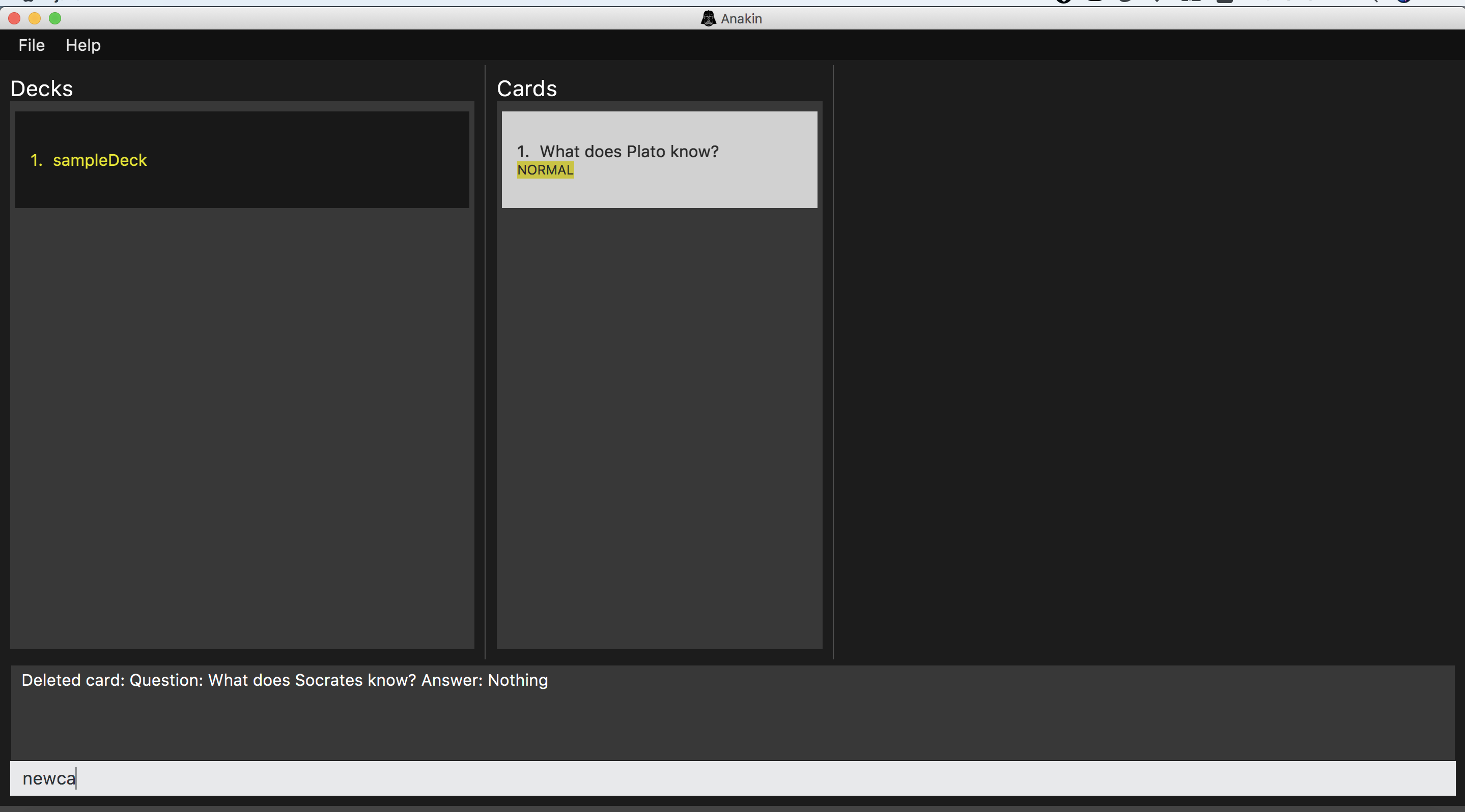

Suppose you are attempting to create a new card.

Image: Partially completed newcard command.

-

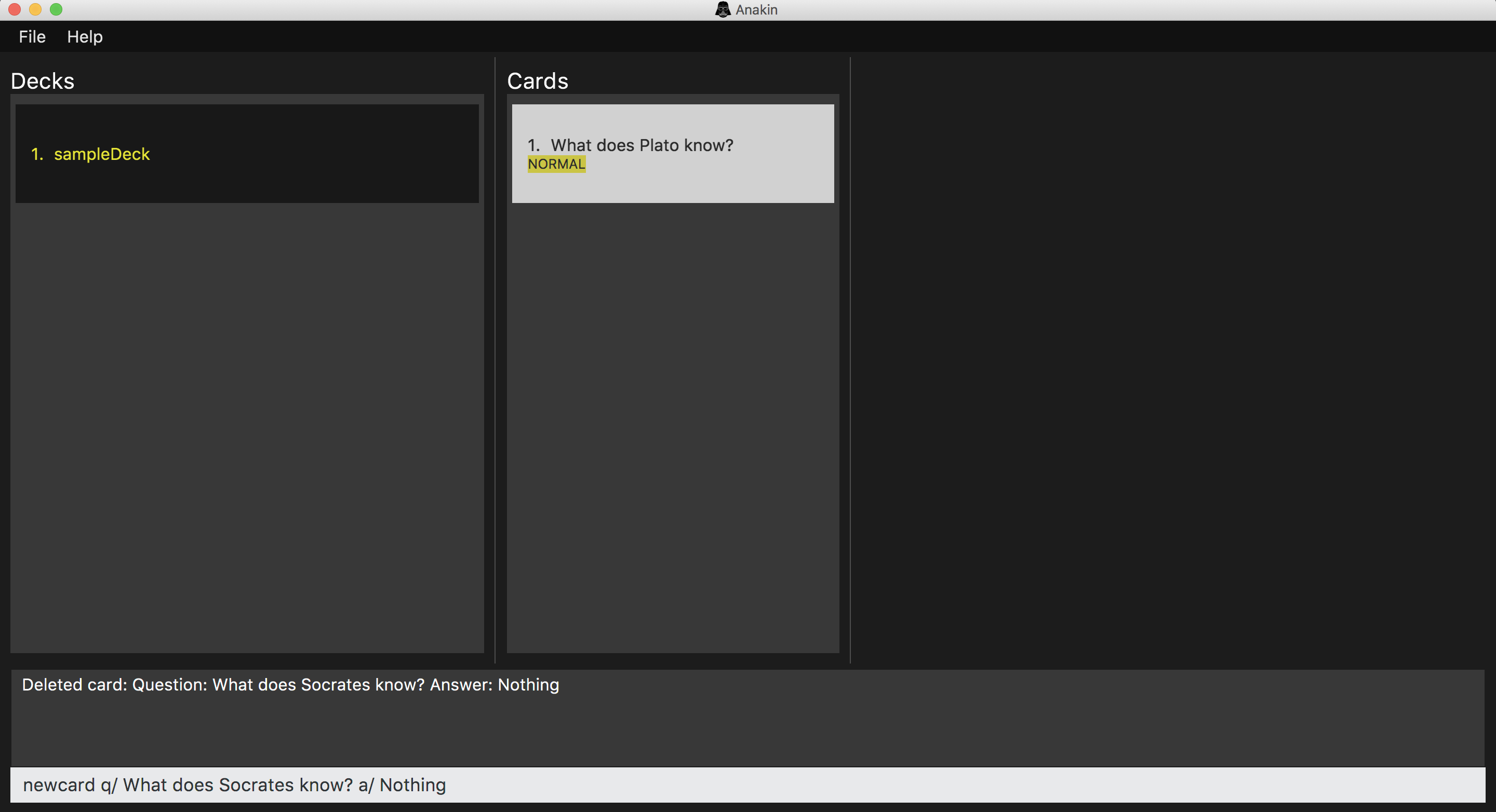

Just hit <TAB> and you will instantly get the desired result

Image: After pressing <TAB> the command is automatically completed!

If the command box is empty, autocompletion will default to

This feature does not work when the command includes leading or trailing whitespace.

Rank: `rank`

Use this command to sort all cards by your cumulative performance score on each card.

Format: `rank`

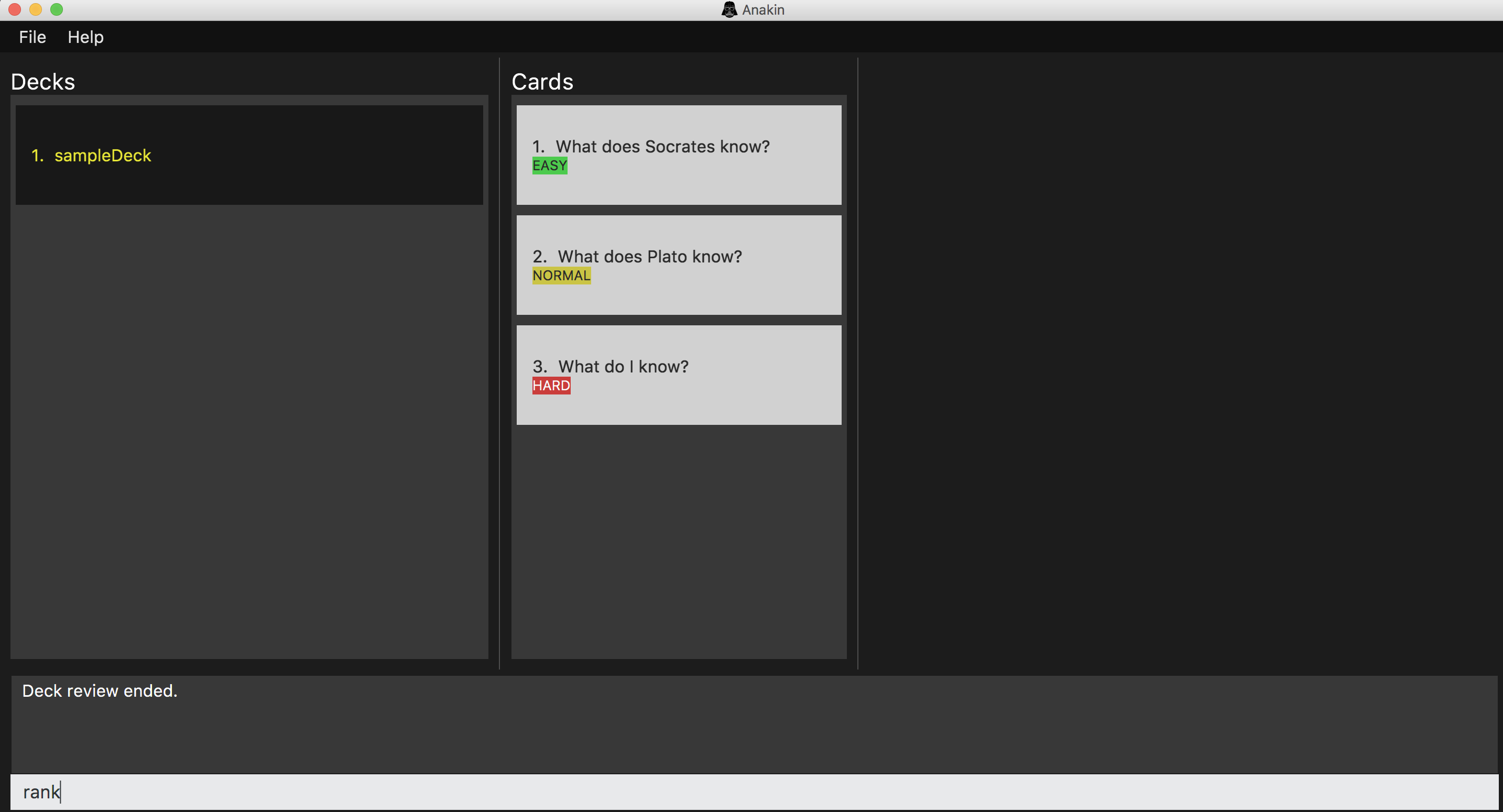

Examples: Suppose you are studying for an important exam, and you want to find out which cards you performed poorly on so you can review them.

Classify cards as described in the `classify` section above.

Image: Appearance of cards before sorting by performance.

Type `rank` and you will instantly get the desired result.

Image: Appearance of cards after sorting by performance. As can be seen, harder cards move to the top.

The indicator in the card panel only shows the most recent classification of a card, while the scheduling algorithm takes all past reviews into account. As such, cards labelled HARD may not always appear above cards labelled NORMAL.
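Conceptually, `rank` is a sort on each card's cumulative score. The Java sketch below illustrates this under two assumptions that this guide does not confirm: each card exposes a numeric `reviewScore`, and a lower score means poorer performance (as with SM-2's easiness factor). The `Card` record and the `rank` method are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

public class RankExample {
    /** Hypothetical minimal card: a question and its cumulative review score. */
    record Card(String question, double reviewScore) {}

    /** Returns a sorted copy of the cards, lowest (weakest) score first. */
    static List<Card> rank(List<Card> cards) {
        List<Card> ranked = new ArrayList<>(cards);
        ranked.sort(Comparator.comparingDouble(Card::reviewScore));
        return ranked;
    }

    public static void main(String[] args) {
        List<Card> deck = List.of(
                new Card("What is 2 + 2?", 2.8),
                new Card("State Bayes' theorem", 1.4),
                new Card("Define a monad", 2.1));
        for (Card card : rank(deck)) {
            System.out.println(card.question());
        }
        // Prints, one per line: State Bayes' theorem, Define a monad, What is 2 + 2?
    }
}
```

`List.sort` is guaranteed to be stable, so cards with equal scores keep their original relative order.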

Contributions to the Developer Guide

Given below are sections I contributed to the Developer Guide. They showcase my ability to write technical documentation and the technical depth of my contributions to the project.

Autocomplete Implementation

Current implementation (v1.4)

The logic behind autocompletion is handled by an Autocompleter class.

When <TAB> is pressed, the command box raises an event and checks whether its current text is autocompletable, that is, whether it is a prefix of one of the commands supported by Anakin.

If it is autocompletable, Autocompleter searches through the list of supported commands in lexicographic order and finds the first match for the current text in the command box.
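The lookup described above can be sketched in Java as follows. This is a minimal, self-contained illustration under stated assumptions, not Anakin's actual Autocompleter: the command words, their completion texts (including the `q/`, `a/` and `n/` prefixes), and the method names `isAutocompletable` and `autocomplete` are all hypothetical. A `TreeMap` keeps the command words sorted, so the first prefix match found while iterating is the lexicographically first one.

```java
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

/** Hypothetical sketch of prefix-based command autocompletion. */
public class Autocompleter {
    // Maps each supported command word to its (assumed) AUTOCOMPLETE_TEXT.
    // TreeMap iterates its keys in lexicographic order.
    private static final Map<String, String> COMPLETIONS = new TreeMap<>(Map.of(
            "newcard", "newcard q/ a/",
            "newdeck", "newdeck n/",
            "rank", "rank",
            "redo", "redo",
            "undo", "undo"));

    /** Returns true if the text is a prefix of at least one supported command word. */
    public static boolean isAutocompletable(String text) {
        return COMPLETIONS.keySet().stream().anyMatch(word -> word.startsWith(text));
    }

    /** Returns the completion of the lexicographically first command word starting with the text. */
    public static Optional<String> autocomplete(String text) {
        for (Map.Entry<String, String> entry : COMPLETIONS.entrySet()) {
            if (entry.getKey().startsWith(text)) {
                return Optional.of(entry.getValue());
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(autocomplete("newc").orElse("(no match)")); // newcard q/ a/
        System.out.println(autocomplete("r").orElse("(no match)"));    // rank
        System.out.println(autocomplete("xyz").orElse("(no match)"));  // (no match)
    }
}
```

Because matching uses `String.startsWith` on the raw text, input with leading whitespace such as `" undo"` finds no match, which is consistent with the whitespace limitation noted in the User Guide.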

Design considerations

- Alternative 1 (current choice): Use a pre-decided completion text for each command, together with a list of all supported command words.
  - Explanation: Each command has a pre-decided `AUTOCOMPLETE_TEXT` field, and we prefix-match the text in the command box against our set of supported command words. If there is a match, we replace the current text in the command box with the `AUTOCOMPLETE_TEXT` of the matching command.
  - Pros: Provides better modularity by reducing the dependency of autocompletion on external components.
  - Cons: Less personalisation for each user, as this design does not take into account the commands the user has issued in the past.
- Alternative 2: Match the current command against the history of previously executed commands.
  - Pros: Better personalisation for each user.
  - Cons: Worse modularity and separation of concerns, as the autocompleter would need to interact with the history component. This would increase coupling between the autocompleter and history components.
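To make the trade-off concrete, here is a hypothetical sketch of what Alternative 2 might look like. It was not implemented in Anakin; the class name `HistoryAutocompleter` and the example history entries are illustrative only. Note that the autocompleter must now be handed the command history, which is exactly the extra coupling described under Cons.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical sketch of Alternative 2: completing from the command history. */
public class HistoryAutocompleter {
    // Dependency on the history component: the extra coupling listed under Cons.
    private final List<String> history;

    public HistoryAutocompleter(List<String> history) {
        this.history = history;
    }

    /** Returns the most recently executed command that starts with the given text, if any. */
    public Optional<String> autocomplete(String text) {
        for (int i = history.size() - 1; i >= 0; i--) {
            String pastCommand = history.get(i);
            if (pastCommand.startsWith(text)) {
                return Optional.of(pastCommand);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Illustrative history, oldest first.
        HistoryAutocompleter completer = new HistoryAutocompleter(
                List.of("newdeck n/CS2103T", "rank", "newdeck n/MA1101R"));
        System.out.println(completer.autocomplete("newd").orElse("(no match)")); // newdeck n/MA1101R
    }
}
```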

How to add autocompletion for new commands

To make a new command autocompletable, follow the steps below.

Example scenario: Suppose we have just introduced the `undo` command and wish to integrate it with the autocompleter.

Step 1: Add the `AUTOCOMPLETE_TEXT` field to the command class. This decides what the command autocompletes to when the user presses <TAB>.

Step 2: Add the class to Autocompleter.java.

With that, you’re good to go!
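The two steps can be sketched as below, using a simplified stand-in for Autocompleter.java. The nested `UndoCommand` and `RedoCommand` classes, the `COMMAND_WORD` constant, and the `COMPLETIONS` map are assumptions for illustration; only the `AUTOCOMPLETE_TEXT` field name comes from this guide.

```java
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

/** Hypothetical sketch of registering a new command with the autocompleter. */
public class AutocompleteRegistration {

    /** An existing command, shown for comparison. */
    static class RedoCommand {
        static final String COMMAND_WORD = "redo";
        static final String AUTOCOMPLETE_TEXT = "redo";
    }

    /** Step 1: the new command declares the text it autocompletes to. */
    static class UndoCommand {
        static final String COMMAND_WORD = "undo";
        static final String AUTOCOMPLETE_TEXT = "undo";
    }

    /** Step 2: the autocompleter lists every supported command, now including UndoCommand. */
    static final Map<String, String> COMPLETIONS = new TreeMap<>(Map.of(
            RedoCommand.COMMAND_WORD, RedoCommand.AUTOCOMPLETE_TEXT,
            UndoCommand.COMMAND_WORD, UndoCommand.AUTOCOMPLETE_TEXT));

    /** Returns the completion of the lexicographically first command word starting with text. */
    static Optional<String> autocomplete(String text) {
        return COMPLETIONS.entrySet().stream()
                .filter(entry -> entry.getKey().startsWith(text))
                .map(Map.Entry::getValue)
                .findFirst();
    }

    public static void main(String[] args) {
        System.out.println(autocomplete("un").orElse("(no match)")); // undo
    }
}
```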

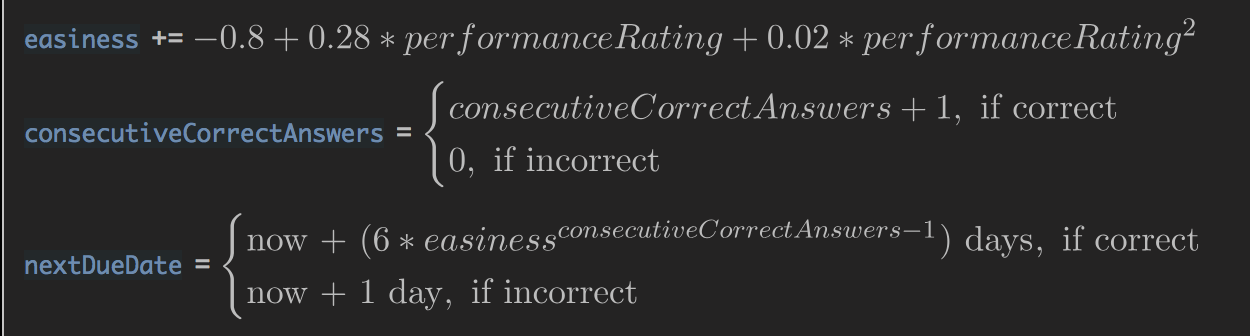

Card Scheduling implementation

Current implementation (v1.4)

The logic behind scheduling is handled in Card.java. Each card contains `reviewScore` and `nextReviewDate` fields, which record how well the user remembers the card and when the user should next review it, respectively.

When a card is classified using `classify`, its review score is adjusted according to a modified version of the SuperMemo 2 algorithm, detailed below. The updated review score is then used to calculate the number of days to add to the current review date.
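As a rough illustration of this two-step flow (update the score first, then derive the interval from it), here is a sketch of a self-scheduling card. It uses textbook SM-2 rules on a 0 to 5 quality scale rather than Anakin's modified variant, and the `repetitions` and `intervalDays` fields, the `classify(int, LocalDate)` signature, and the initial score of 2.5 are assumptions.

```java
import java.time.LocalDate;

/**
 * Hypothetical sketch of a card that reschedules itself when classified.
 * Uses textbook SM-2 rules, not necessarily Anakin's modified variant.
 */
public class Card {
    private static final double MIN_REVIEW_SCORE = 1.3; // SM-2 floor for the easiness factor

    private double reviewScore = 2.5; // SM-2's usual initial easiness factor
    private int repetitions = 0;      // consecutive successful reviews (assumed field)
    private int intervalDays = 0;     // days from the last review to the next (assumed field)
    private LocalDate nextReviewDate;

    public Card(LocalDate firstReviewDate) {
        this.nextReviewDate = firstReviewDate;
    }

    /** Records a review on SM-2's 0-5 quality scale (0 = blackout, 5 = perfect recall). */
    public void classify(int quality, LocalDate reviewDate) {
        // Step 1: adjust the review score with the SM-2 easiness update.
        int gap = 5 - quality;
        reviewScore = Math.max(MIN_REVIEW_SCORE, reviewScore + 0.1 - gap * (0.08 + gap * 0.02));

        // Step 2: use the updated score to decide how many days to add.
        if (quality < 3) {
            repetitions = 0; // failed recall: restart the sequence of intervals
            intervalDays = 1;
        } else {
            repetitions++;
            if (repetitions == 1) {
                intervalDays = 1;
            } else if (repetitions == 2) {
                intervalDays = 6;
            } else {
                intervalDays = (int) Math.round(intervalDays * reviewScore);
            }
        }
        nextReviewDate = reviewDate.plusDays(intervalDays);
    }

    public double getReviewScore() {
        return reviewScore;
    }

    public LocalDate getNextReviewDate() {
        return nextReviewDate;
    }

    public static void main(String[] args) {
        Card card = new Card(LocalDate.of(2018, 11, 1));
        card.classify(4, LocalDate.of(2018, 11, 1));
        System.out.println(card.getNextReviewDate()); // 2018-11-02
        card.classify(4, LocalDate.of(2018, 11, 2));
        System.out.println(card.getNextReviewDate()); // 2018-11-08
        card.classify(4, LocalDate.of(2018, 11, 8));
        System.out.println(card.getNextReviewDate()); // 2018-11-23
    }
}
```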

Design considerations

- Alternative 1 (current choice): Implement the scheduling update within the card class.
  - Pros: As only cards are meant to be scheduled, this increases cohesion by keeping the scheduling process together with the cards it applies to.
  - Cons: Should there be a need to schedule decks, we will need to refactor our scheduling code.
- Alternative 2: Create a separate scheduler class to handle performance operations.
  - Pros: This would allow us to schedule multiple types of objects.
  - Cons: This would decrease cohesion, as we only intend for cards to be scheduled in this application.

Scheduling Algorithm details

The variant of the SuperMemo 2 algorithm implemented in this application works as follows:

Image: A description of the SuperMemo 2 variant we implemented. Formula taken from Blue Raja’s blog.
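For reference, the standard (unmodified) SM-2 rules that this variant builds on are reproduced below. These are the textbook SM-2 formulas, not a transcription of the image above: q in {0, ..., 5} is the quality of a response, EF is the easiness factor, and I(n) is the interval in days after the n-th successful repetition. A response with q < 3 restarts the repetition count from I(1).

```latex
\begin{aligned}
EF' &= \max\Bigl(1.3,\; EF + 0.1 - (5 - q)\bigl(0.08 + 0.02\,(5 - q)\bigr)\Bigr) \\
I(1) &= 1, \qquad I(2) = 6, \qquad I(n) = I(n-1) \cdot EF \quad \text{for } n > 2
\end{aligned}
```

For example, q = 5 raises EF by 0.1, q = 4 leaves it unchanged, and q = 3 lowers it by 0.14.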

Performance Rating: how the user assesses their performance during `classify`.

- `easy` corresponds to 0 before scaling
- `normal` corresponds to 1 before scaling
- `hard` corresponds to 2 before scaling

Easiness Score: this corresponds to `reviewScore` in our implementation.

As we only have three levels of difficulty, we have added a `BIAS` term to scale the `reviewScore`.
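The exact form of the `BIAS` term is not spelled out here, so the sketch below shows only one plausible reading: it maps the unscaled rating r in {0, 1, 2} onto SM-2's 0 to 5 quality scale as q = 5 - BIAS * r, then applies the standard SM-2 easiness update to `reviewScore`. The names `toQuality` and `updateReviewScore`, the `Rating` enum, and the value BIAS = 1.0 are hypothetical.

```java
import java.util.Locale;

public class ReviewScoreUpdate {
    // Hypothetical scaling constant; Anakin's actual BIAS value and formula may differ.
    static final double BIAS = 1.0;
    static final double MAX_QUALITY = 5.0;
    static final double MIN_REVIEW_SCORE = 1.3; // SM-2 floor for the easiness factor

    /** Ordinals 0, 1, 2 match the unscaled ratings for easy, normal and hard. */
    enum Rating { EASY, NORMAL, HARD }

    /** Scales the three-level rating onto SM-2's 0-5 quality scale (assumed mapping). */
    static double toQuality(Rating rating) {
        return MAX_QUALITY - BIAS * rating.ordinal();
    }

    /** Applies the standard SM-2 easiness update to the current review score. */
    static double updateReviewScore(double reviewScore, Rating rating) {
        double gap = MAX_QUALITY - toQuality(rating);
        double updated = reviewScore + 0.1 - gap * (0.08 + 0.02 * gap);
        return Math.max(MIN_REVIEW_SCORE, updated);
    }

    public static void main(String[] args) {
        for (Rating rating : Rating.values()) {
            System.out.printf(Locale.ROOT, "%s -> quality %.1f, new score %.2f%n",
                    rating, toQuality(rating), updateReviewScore(2.5, rating));
        }
        // EASY -> quality 5.0, new score 2.60
        // NORMAL -> quality 4.0, new score 2.50
        // HARD -> quality 3.0, new score 2.36
    }
}
```

With BIAS = 1.0 the three ratings land on SM-2 qualities 5, 4 and 3, so even a `hard` review counts as a successful recall; a larger BIAS would push `hard` below 3 and make it behave like a failed review.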

Additional Information

For more information about me and to view more of my work, please feel free to check out my website here.